Drawing with Code Interpreter

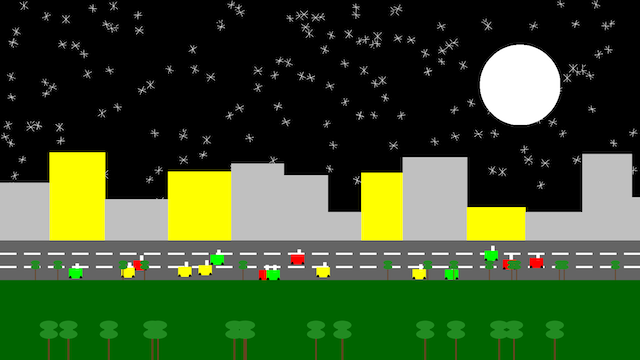

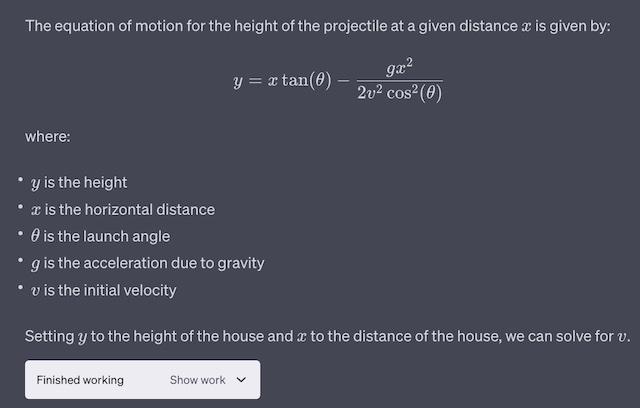

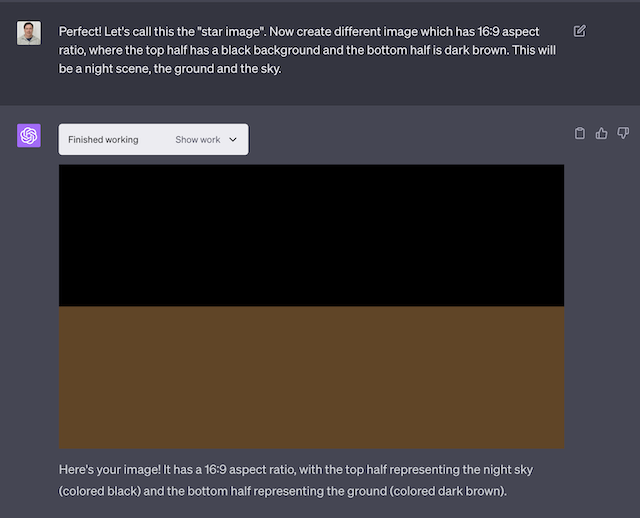

While Midjourney and Stable Diffusion can produce stunning images, I recently started playing around with a quite a different style of AI Art. Mine looks a bit more, um, primitive. Here’s an AI Art image I call City Scene:

I created the above masterpiece using GPT-4 Code Interpreter and the Pillow library. Code Interpreter is a ChatGPT interface to a Python notebook. A notebook in this context is a running Python interpreter with multiple blocks of code. So instead of just spitting out a block of code for you to copy-n-paste into some other system, ChatGPT will actually run the code for you. By default the code is hidden and it just displays the resulting text or image. But you can click to view the code if you want.

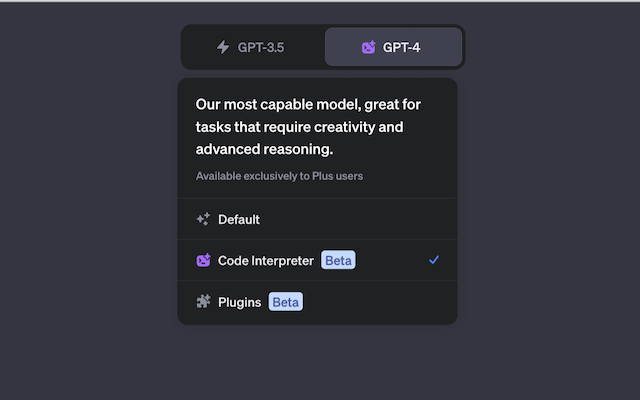

To try it out, select the Code Interpreter option under the GPT-4 button:

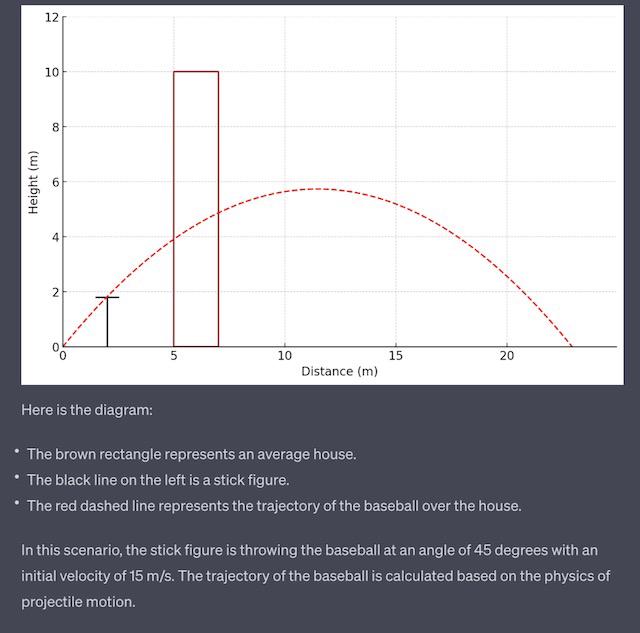

For my first attempt at drawing with Code Interpreter I asked ChatGPT to draw a person throwing a baseball over a house. This is what it came back with:

I thought this was really funny. It used matplotlib which is primarily for

making graphs, and it’s not half bad given that severe limitation. But I wanted

something a bit more figurative, so asked if it could use some other method to

draw pictures, and it said no!

Unfortunately, in this environment, I have access to Python-based tools only, and the available drawing libraries like matplotlib are not designed for detailed artistic illustrations. They are more suited for creating plots, graphs, and simple geometric diagrams. Creating a detailed illustration such as a house with a roof and a chimney, or a stick figure in a way that resembles hand-drawn images is beyond their capabilities.

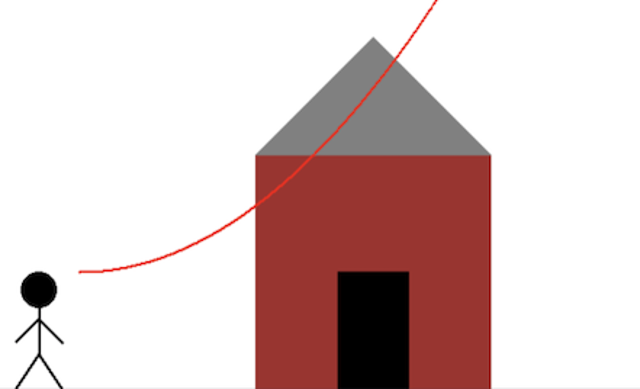

Curious, I asked ChatGPT to list all installed packages and Pillow, a fork of the Python Image Library (PIL), caught my eye. I knew it had some simple drawing commands, so I asked ChatGPT to draw the same baseball scenario using Pillow:

It seems like ChatGPT lied to us, it can draw! This is not uncommon with LLMs: you can coax them into doing things. This is why chain of thought reasoning works so well, as well as prompt-injection and “jail breaking”.

I liked the stick figure and the house, but of course the baseball’s path is wrong. I tried for a while to get the baseball arcing over the house. ChatGPT even did a fair amount of math:

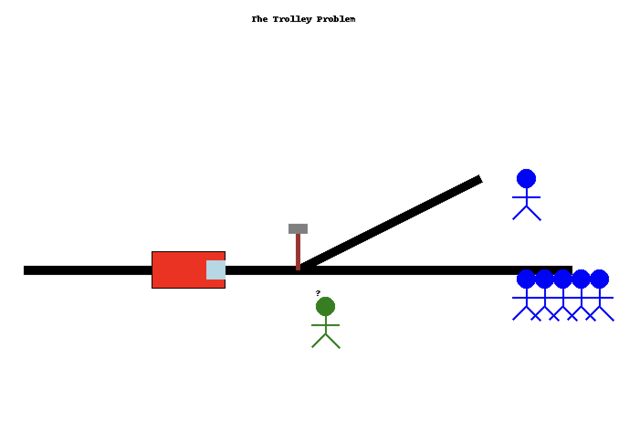

But it never came up with a realistic looking path. Eventually I gave up on the baseball scenario and moved on to other drawings. I created a version of the trolley problem:

Some prompts I used while iteratively creating the trolley image:

That’s good, can you back up the “trolley” so that it has some track before it hits the fork? And give it a little windshield or something to indicate which way it’s going.

Windshield is good. But you didn’t really back up the trolly. It should be straight track, then trolly, then more straight track, THEN the fork.

Good. Now a new person whose observing the scene with question mark over their head. Also make sure there is a nice white boarder around the drawing, yours is up against the right side of the image. Also write in text “the trolly problem” as like the drawing title.

Here’s the final trolley problem code it produced:

# Create a new image with white background

image = Image.new('RGB', (800, 600), 'white')

draw = ImageDraw.Draw(image)

# Draw the title

draw.text((350, 20), 'The Trolley Problem', fill='black')

# Draw the track

draw.line((100, 300, 700, 300), fill='black', width=10) # Main track

draw.line((400, 300, 600, 200), fill='black', width=10) # Forked track

# Draw the trolley

draw.rectangle((trolley_x_center - trolley_width//2, trolley_y_center - trolley_height//2,

trolley_x_center + trolley_width//2, trolley_y_center + trolley_height//2),

fill='red', outline='black') # Elongated trolley

draw.rectangle((trolley_x_center + trolley_width//2 - windshield_width, trolley_y_center - windshield_height//2,

trolley_x_center + trolley_width//2, trolley_y_center + windshield_height//2),

fill='lightblue') # Windshield

# Draw the people

for i in range(5): # Five people

draw_stick_person(650 + i*20, 320)

draw_stick_person(650, 210) # One person

# Draw the lever

draw.line((lever_x, lever_y, lever_x, lever_y - 50), fill='brown', width=5) # Lever handle

draw.rectangle((lever_x - 10, lever_y - 50, lever_x + 10, lever_y - 40), fill='grey') # Lever base

# Draw the person next to the lever

draw_stick_person(lever_x + 30, lever_y + 50, 'green') # Moved next to new lever position

draw.text((lever_x + 20, lever_y + 20), '?', fill='black') # Question mark over person's head

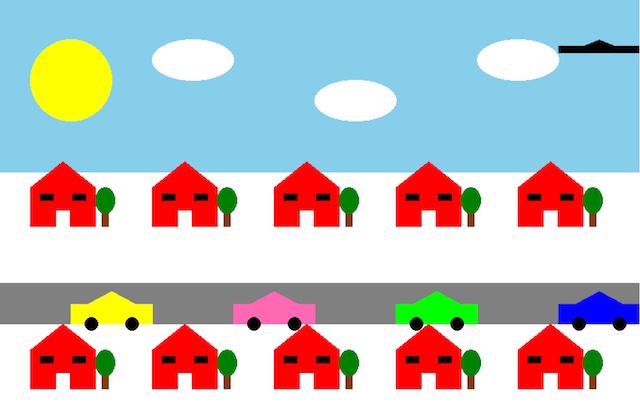

image.show()Next I created this neighborhood scene:

Some prompts used here:

That’s a good house and tree. Now draw a street with 5 houses above it and 5 houses below it, all just smaller versions of this house and tree.

That’s good but the houses are too close to each other. I can’t really see the trees. Make the houses smaller so there is space between them to see the trees.

That looks really good. How about a car driving down the street.

Add 3 more cars: yellow, pink and green

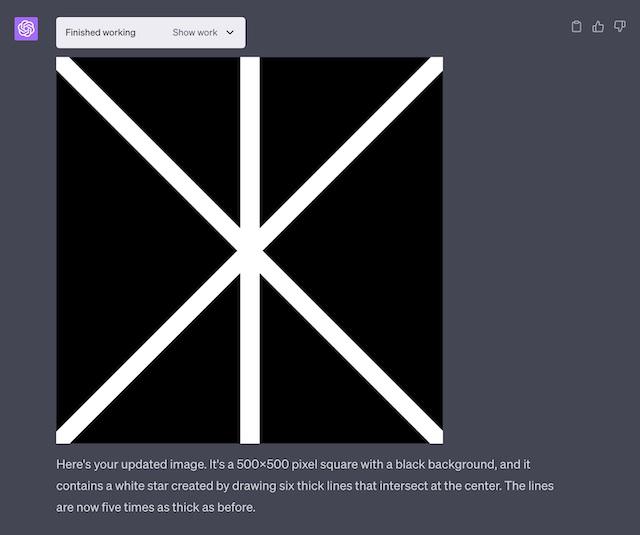

For the neighborhood I just asked ChatGPT to draw multiple copies of the houses, trees, and cars. But Pillow contains a powerful feature: you can create multiple images and then composite them together. So I could create an image of a house or car in isolation, and then paste multiple copies of it into the main scene. To create City Scene the first thing I did was create an image and draw a very simple star into it.

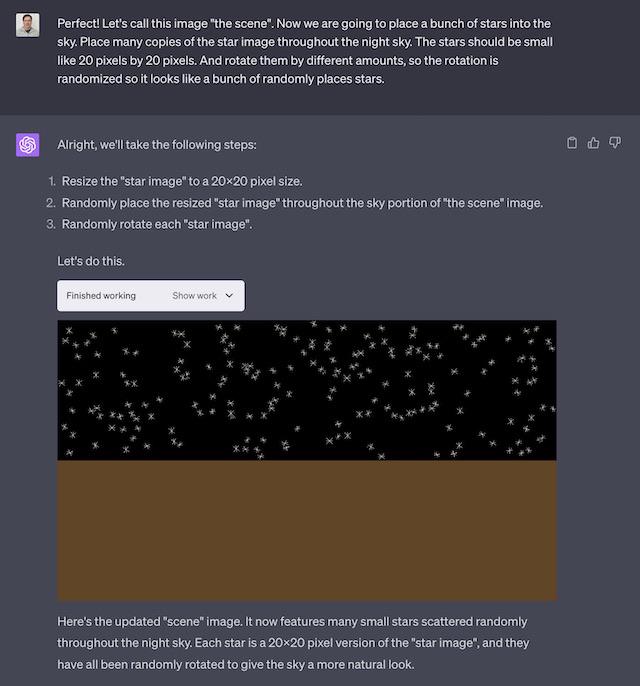

Then I created a background:

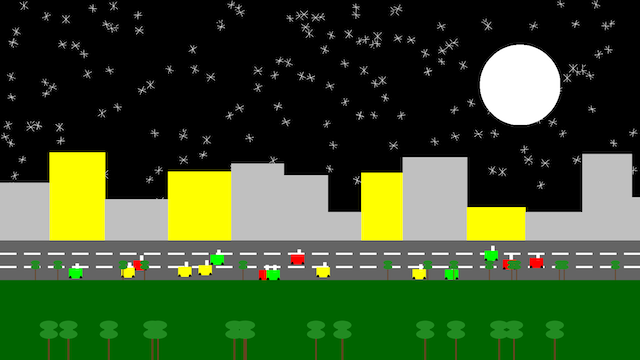

Finally, I asked it to place the stars:

I liked the result. I found its phrase “to give the sky a more natural look”

interesting. I didn’t actually say “natural” in my prompt, but it’s certainly

what I intended. Its generated code is very clean and easy to read, with nice

comments. Here’s the code placing the 200 stars using the random.randint

function and the Image.rotate, and Image.paste methods:

import random

from PIL import ImageOps

# Define the number of stars

num_stars = 200

# Resize the star image to 20x20

star_resized = img.resize((20, 20))

# Loop to place stars

for _ in range(num_stars):

# Randomize the location

x = random.randint(0, img_size_16_9[0]-20)

y = random.randint(0, img_size_16_9[1]//2-20)

# Randomize the rotation

angle = random.randint(0, 360)

star_rotated = star_resized.rotate(angle)

# Place the star on the scene

img_16_9.paste(star_rotated, (x, y))

# Display the image

img_16_9.show()I used this same approach to create separate images for the cars and trees. For

best results ask it to create a background image with alpha=0 and then draw

your object with alpha=255 so that the sprites have transparent backgrounds.

Although you are describing the scene in English, knowing some terms like

alpha lets you communicate your intentions compactly.

Here is the final image again. I think you could produce a much higher quality images than this if you patiently and repeatedly used the composition technique in clever ways.

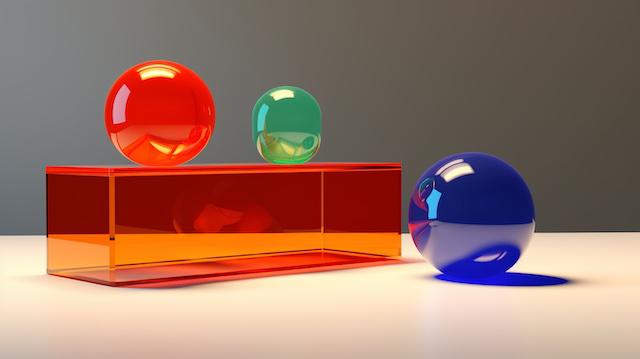

Drawing with Code Interpreter is a very different experience from using Midjourney or Stable Diffusion, because GPT-4 actually understands language! When you prompt a current AI Art system you are really providing a “cloud of keywords”, every word has associations which alter the final image, but the AI does not really understand the way the words relate to each other. To illustrate the dramatic difference in language understanding here’s a prompt I gave to Midjourney:

A sideview of a table. On the table are 3 shapes: red cube, blue cube and green cube. On the red cube is a yellow sphere. On blue cube are two orange cones. On the green cube are 3 purple spheres. –v 5.2 –ar 16:9

And here is the resulting image:

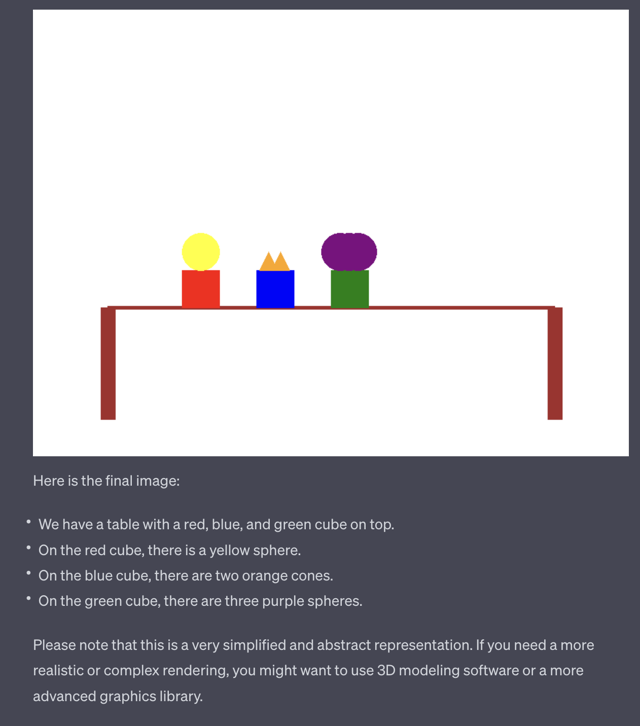

Nothing in this image matches the description, except arguably there is a table. Feeding the same prompt to GPT-4 Code Interpreter I got this:

While the art style is simplistic, everything is arranged how I asked. I wonder where the limit is. I’d like to see someone create a super-long prompt where ChatGPT draws everything correctly.

So why can’t they just combine the language understanding of GPT-4 with the image synthesis of Midjourney or Stable Diffusion? This is one of the hottest topics in the field: Multimodal Machine Learning. Once really good multi-modal models exist you’ll be able to using arbitrary human language to create and then refine photorealistic images. That will be amazing.

You might draw a person and then iterate on specific details, “On her left wrist place three bracelets, the first bracelet is gold with a single emerald charm, the second bracelet is silver, etc.” Draw a kitchen scene and then say “near the toaster on the counter add a blue Kitchen Aid 5-Quart Artisan mixer, but an older one with a dented bowl” and it will draw that perfectly.

The underlying technology of artificial neural nets is advancing rapidly. While AI Art systems today can produce stunning images, operating them requires a hit-or-miss selection of keywords. LLMs are excellent at language understanding and human language is compact and expressive. We will soon see multi-modal systems which combine advanced language understanding with high-quality image synthesis. Going forward, advanced language understanding will be part of most AI systems, because it’s so useful and powerful.